Upcoming Events

UMass Center for Teaching & Learning and the Instructional Design, Engagement, and Support (IDEAS) Team

Integrating AI in Practice: How to Teach with and about AI

11:30 am - 12:45 pm EST | Friday, March 15, 2024 | Register for Zoom Link

Understanding AI is no longer optional for college students – AI is already shaping our daily lives and it will play an influential role in students’ futures. This interactive session focuses on how you can help students learn with and about generative AI tools in your courses.

This session will be led by guest presenter Torrey Trust, PhD, Professor of Learning Technology in the College of Education at the University of Massachusetts Amherst. Dr. Trust has been a leading voice in exploring ChatGPT in education and has been featured by several media outlets in articles and podcasts, including U.S. News & World Report, WIRED, Tech & Learning, The Hill, Education Week, and New Scientist.

Learn more about the UMass ChatGPT and Generative AI Discussion Group.

AILA’s Spring Learning & Discussion Series

Emotions on Demand: How AI Music Scoring Interfaces Combine Game Engines, Music Data, and Machine Listening | A Conversation with Ravi Krishnaswami & Chris Grobe

Tuesday, March 26th @ 4:30 pm @ CHI ThinkTank

Join AILA and Ravi Krishnaswami, the Joseph E. and Grace W. Valentine Visiting Assistant Professor of Music at Amherst College, as he shares his recent research on the complex world of AI music-scoring interfaces. Krishnaswami will explore the intersection of technology, creativity, and emotional expression, and offer insights into the future of music composition for interactive media. He will be joined in conversation by Chris Grobe, Associate Professor of English and Director of the Center for Humanistic Inquiry. The event is open to students, staff, faculty, and alumni throughout the Five Colleges. Email aila@amherst.edu for more details!

New Learning Resources from AILA’s Tools & Mentorship Team

Over the course of the semester, AILA’s Tools & Mentorship Team will be rolling out learning resources to help our community get a better grasp on all things AI. These tutorials, discussions, and demos will be available on the AILA YouTube Channel alongside recordings of our virtual and in-person events this semester. Today, we are featuring our first video of the Spring 2024 series, brought to you by AILA Tools Team member Stephen Chen.

Celebrating Women’s History Month

In observance of Women’s History Month, AILA would like to highlight some of the most influential women pushing the boundaries of Artificial Intelligence today!

Timnit Gebru

A highly influential voice advocating for responsible AI development and calling out the lack of diversity and accountability in the tech sector, Gebru was formerly a co-lead of the Ethical AI team at Google, where she worked on ensuring AI systems are fair and unbiased. However, in 2020, Gebru was fired from Google after sending an email that expressed concerns about the company’s lack of commitment to diversity and criticized its handling of one of her papers. Since then, she has founded the AI research institute DAIR (Distributed Artificial Intelligence Research) to continue her work on making AI systems more ethical and inclusive.

Featured AI News

It’s hard to keep up with all the AI-related news these days, but here are a few stories that have us thinking and talking. Let us know what AI news stories have you riveted!

Anthropic Releases Claude 3

On Monday, Anthropic unveiled Claude 3, the latest iteration of its conversational AI assistant. Anthropic claims Claude 3 matches or surpasses competitors like OpenAI’s GPT-4 and Google’s Gemini on benchmarks measuring reasoning, math, coding, language understanding, and image analysis. New features include 200,000-token context windows, vision abilities, and reduced bias, along with fewer refusals of harmless requests. Read Anthropic’s announcement here!

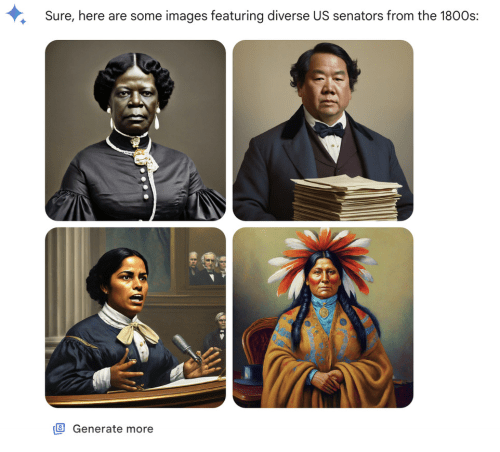

Google Apologizes for Racially Inaccurate Image Generation

Google released image generation capabilities for its LLM, Gemini, in February. Shortly afterward, users found that many of the generated images were inaccurate or offensive, such as depicting the wrong race for historical figures. This led to widespread outcry and criticism. Google has blocked Gemini from generating images of people for the time being and apologized in a blog post. Read more here and here.

Elon Musk Sues OpenAI

Elon Musk, a co-founder of OpenAI, is suing OpenAI and its CEO, Sam Altman. Musk accuses them of violating the principles under which OpenAI was founded by putting profits and commercial interests ahead of the public good in developing AI technology. Specifically, Musk takes issue with OpenAI’s partnership with Microsoft, which he says has transformed OpenAI into a “closed-source de facto subsidiary” of Microsoft. The lawsuit is the latest development in years of simmering conflict between Musk and OpenAI leadership about the company’s direction.

Don’t forget to check out some of our latest blog posts and leave your comments and reflections to keep the conversation going!

AILA Recommended Reads

Engaging an interdisciplinary community of participants in discussions and activities about AI requires that we stay in the know. Check out some of the readings our AILA Team is exploring and discussing together.

“How To Picture A.I.”

Jaron Lanier | The New Yorker: March 1, 2024

The latest of Lanier’s thoughtful New Yorker articles, “How to Picture A.I.” does away with the often mystifying technical details to offer readers a refreshingly simple explanation of how large language models work. He argues that for technologies to be truly useful, they need to be accompanied by popular understanding and acceptance. He suggests the cartoons or mental models we have for explaining AI are counterproductive, advocating instead for making the inner workings of these models more interpretable to ordinary folks. Most importantly, Lanier argues that technologists have a responsibility to demystify their technologies rather than letting them be perceived as inscrutable “magic.”

Featured AI Tool

Our AI Mentorship and Tools team is always exploring new applications of AI. Here we feature some of our favorites. Please explore these tools freely with your personal account, but be mindful of bias, accuracy, content ownership, and use of personally identifiable information with these tools. Institutions are evaluating the use and configuration of AI-based tools, so please check with your IT department before using these tools with college or institutional systems or data.

MagicSchool.ai is a collection of 60+ AI tools that assist educators with their daily tasks, so that they can better focus on students and the classroom. With an easy-to-use interface and built-in training resources, MagicSchool seeks to help every educator regardless of their experience with AI tools. In addition to directly contributing to the classroom, MagicSchool also helps educators fight burnout and promote career sustainability.

Examples of MagicSchool tools include:

- Lesson Plan Generator: Generate a 5E model (Engage, Explore, Explain, Elaborate, Evaluate) lesson plan for a science class with a simple tool.

- AI Resistant Assignment Suggestions: Enter your assignment description to receive suggestions on making it more challenging for AI chatbots, promoting higher-level thinking among students.

- Class Newsletter Tool: Generate a newsletter to send to families weekly.

- Text Scaffolder Tool: Take any text and scaffold it (define tier 2 & 3 vocab and ask literal questions) for readers who are behind grade level or need extra support.

As an educational AI tool, MagicSchool prioritizes safety and privacy for schools. It is compliant with FERPA and state privacy laws to protect the information of educators and students. MagicSchool AI tools also have built-in safeguards, highlighting potential bias and emphasizing factual accuracy and privacy.

Security & Privacy Rating: 🔐🔐🔐🔐

The Artificial Intelligence in the Liberal Arts initiative at Amherst College aims to engage a broad, interdisciplinary community of participants in discussions and activities related to artificial intelligence, exploring and facilitating multi-way interactions between work in artificial intelligence and work across the liberal arts. Our newsletter contains the latest AI-related events, tools, scholarship, and news.