“Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.” — Stephen Hawking

Upcoming Events

AILA Tools Team Demos SCISPACE; 11/29 @ 10a in Science Center Atrium

The Mentorship and Tools team will be popping up to demo SCISPACE, an AI search engine, paraphraser, and citation generator designed for academic research. Come visit the Tools team for one-on-one help with specific projects, for help setting up the tool on your laptop, or to learn how to use AI in your projects. Read more about this incredible mentorship opportunity here.

The ChatGPT Café is back!

In partnership with Amherst Information Technology, AILA is hosting another round of pop-up labs to offer members of the Amherst community the chance to experiment with the latest version of ChatGPT-4. We believe that in order to participate in critical conversations around the use and development of generative AI technologies, you must experience them for yourself! Come join us in the Frost Library Café to explore and reflect on what ChatGPT-4, the most powerful version yet, can do. We will have activities and information on hand to help you make sense of your experience.

Upcoming dates are listed below:

- Thurs, Nov 30, 11a-1p (Frost Café)

- Tues, Dec 5, 12p-2p (Frost Café)

- Thurs, Dec 7, 12p-2p (Frost Café)

To learn more about the ChatGPT Café or to propose future dates and times for your department, click here!

Hack the Herd 2023: A 24-hour Problem Solving Event

December 1-2; 5p-5p; Science Center, Amherst College

In partnership with the Office of Sustainability and the Amherst Computer Science Club, AILA presents our first-ever sustainability-focused hackathon.

Hack the Herd is a 24-hour problem-solving event where participants from all across the Five Colleges join forces to solve sustainability problems at Amherst College. Twelve teams have registered to use all their knowledge and skills to generate solutions to sustainability-related problems using real-life technologies and tools! Our hackathon will kick off on Friday, December 1 @ 5p in Science Center A131. Winners will be announced on Saturday, December 2 @ 6:30p in Science Center A131. Stop by to cheer the teams on and to talk AI and creative problem solving with folks from AILA!

ChatGPT, One Year Later

December 6; 4:30-6p; CHI ThinkTank, Amherst College

In collaboration with the Center for Humanistic Inquiry (CHI), AI in Liberal Arts (AILA) is helping to convene a panel of three professors exploring the many developments that are reshaping our shared sense of generative AI and its potential impacts on higher education. This conversation will build on a discussion initiated last year, and we welcome members of the Five College community to come share their ideas!

The panel will feature Professors Chris Grobe (Director of the Center for Humanistic Inquiry), Kristina Reardon (Director of the Intensive Writing Program), and Matteo Riondato (Associate Professor of Computer Science), followed by an open discussion. Learn more about the event here. We hope to see you there!

Featured AI News

I think we can all agree it has been quite the week in AI news. Take a look at some of the stories we dug into to help us make sense of it all. Which AI news stories have you riveted?

Understanding 96 hours of chaos at OpenAI.

Between November 17 and 22, generative AI juggernaut OpenAI fired and rehired its CEO, Sam Altman. The exact reason for his ousting remains unclear, but the public is slowly sorting through this crisis of governance. We've gathered some solid resources to help you understand what happened. To get you started, here's a timeline, a retrospective, and an explainer. Also, The New Yorker's Joshua Rothman has an interesting essay on the chaos. Finally, here's a Reuters article on the rumored Q* (pronounced "Q-star") project that may have contributed to the debacle.

18 countries publish agreement to make AI ‘secure by design’

A group of 18 countries, including the U.S., released an international agreement to make AI secure by design. It provides guidelines for those developing and implementing AI so that systems "function as intended, are available when needed, and work without revealing sensitive data to unauthorised parties." According to one U.S. official, it is "an agreement that the most important thing that needs to be done at the design phase is security." However, the document is non-binding and avoids difficult questions around data collection and appropriate uses of AI. Read more here, or read the full agreement.

Exciting New Partnership

AILA & the Berkeley AI Safety Initiative for Students (BASIS) Bring New AI Mentorship Opportunities to the Five Colleges

In partnership with UC Berkeley's AI Safety Initiative for Students (BASIS), AILA is recruiting both mentors and mentees to participate in the Supervised Program for Alignment Research (SPAR). SPAR recruits researchers and safety experts from top universities (e.g., Stanford, UC Berkeley, Georgia Tech, UMass) to tackle contemporary AI safety and alignment issues with the help of student mentees. If you are a researcher who needs research support or a student who wants real-world project experience in AI alignment and safety, please contact us today!

What is AI alignment?

AI alignment and safety encompass a broad range of research areas, including control mechanisms, understanding the inner workings of AI systems (mechanistic interpretability), reducing biases in AI systems, algorithmic fairness, and inferring human goals from observed behavior (inverse reinforcement learning). Click here to explore recent SPAR projects. Help us bring this exciting opportunity to the Five College community!

AILA Recommended Reads

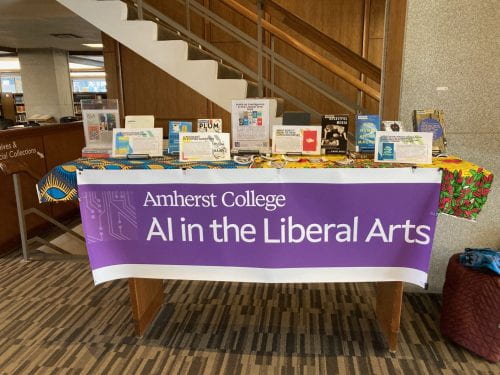

Engaging an interdisciplinary community of participants in discussions and activities about AI requires that we stay informed. Stop by the Frost Library (Amherst College) to explore our top AI book picks!

Starting December 1, the AILA team will have a display of our top Recommended Reads in Frost Library, featuring our favorite artificial intelligence texts, including works of fiction, social science, and philosophy. There is something for everyone! While you're there, pick up an AILA bookmark, read about our book picks, and enter our contest to win a $20 Amazon gift card!

Featured AI Tool

Our AI Mentorship and Tools team is always exploring new applications of AI. Here we feature some of our favorites. Please explore these tools freely with your personal account, but be mindful of bias, accuracy, content ownership, and use of personally identifiable information with these tools. Institutions are evaluating the use and configuration of AI-based tools, so please check with your IT department before using these tools with college or institutional systems or data.

Todoist is a productivity and task management tool with basic yet effective built-in natural language processing (NLP) features. Simply write the task you have in mind and the date or dates you'd like it to be due, and Todoist will use NLP to instantly assign a date and time to your request. For example, "every other sun morning" will automatically set the task to repeat every other Sunday morning and remind you. Moreover, your data and calendars from other platforms can be easily transferred into Todoist, whether you'd like to test it out or commit to a full switch! Todoist also has an impressively concise UI and strict personal data collection and security policies, such as opt-in/opt-out data sharing, 256-bit encryption, and transparent metadata download and deletion options. Try it out here!

Security and Privacy Rating:

Cost: $5 per month, free tier available.